Boring Company Accused of Nearly 800 Environmental Violations in Las Vegas

Elon Musk’s Boring Company is facing serious allegations from Nevada regulators, who claim the company violated environmental rules nearly 800 times during construction of its underground Vegas Loop project. The accusations stem from alleged repeated failures to comply with water pollution control regulations and with the terms of a previous settlement agreement.

State officials say the violations include unauthorized discharges of groundwater, missed environmental inspections, and failure to properly manage construction runoff. Among them, 689 site inspections were reportedly skipped, and the company did not hire an independent environmental manager as required. Additional issues include unpermitted excavation work, spills of tunnel debris onto public roads, and discharges into storm drains.

The Vegas Loop, a privately funded underground transportation system using Tesla vehicles, has been under construction since 2019. It aims to eventually span over 60 miles with more than 100 stations across Las Vegas. Because the project does not rely on federal funding, it has avoided certain environmental reviews and oversight typically required for public infrastructure.

Despite the volume of alleged violations, Nevada regulators have proposed a fine of $242,800, far less than the potential maximum of more than $3 million. Officials say the reduced amount reflects their discretion and a breakdown of penalties across various permits. The company disputes many of the allegations and has not yet been required to pay the fine, pending resolution of the case.

The situation has raised concerns about whether the state’s enforcement is strong enough to ensure environmental compliance. Critics argue that large, well-funded companies may see reduced penalties as a minor cost of doing business, rather than a deterrent. As construction continues, the case highlights growing tension between rapid private development and public environmental accountability.

UK Grants Google Search “Strategic Market Status,” Paving Way for Tighter Oversight

In a landmark move, the UK’s Competition and Markets Authority (CMA) has designated Google Search and its related ad operations as having “Strategic Market Status” (SMS). This is the first time the label has been applied under Britain’s new digital markets regime, opening the door to stricter regulatory control over one of the world’s most dominant tech companies.

The SMS classification stems from findings that Google controls over 90 percent of all general searches in the UK. The CMA says this market dominance gives Google “substantial and entrenched power,” warranting targeted oversight to ensure fair competition. While the designation does not imply wrongdoing, it arms regulators with new tools to impose changes, if needed.

Under the Digital Markets, Competition and Consumers Act, now in force, the CMA can require interventions such as choice screens offering users alternatives to Google, fair ranking rules, and limits on how Google uses publisher content, especially in AI-generated search summaries. AI-powered features such as “AI Overviews” and “AI Mode” are already in scope. The company’s Gemini AI assistant, however, is currently excluded, though that may be revisited.

Google responded by affirming its broad support for the CMA’s goals but warning that overly prescriptive rules could stifle innovation, slow new product launches, and disrupt the UK’s tech ecosystem. In its statement, the company urged regulators to strike a balance between oversight and flexibility.

Supporters of the move, including publishers and consumer advocates, say it could help rebalance power between content creators, advertisers, and platform giants. The designation also arrives amid growing global scrutiny of major tech platforms, particularly over how they use AI and manage their dominance in digital markets.

The CMA plans to launch public consultations later this year on exactly how to use its new powers. Whatever form the interventions take, the message is clear: Google’s search empire in the UK will no longer operate with unchecked freedom.

Instagram Chief Pushes Back on MrBeast’s AI Concerns, Urges Adaptation

Instagram head Adam Mosseri has responded to concerns raised by popular YouTuber MrBeast about the potential threat of AI to the creator economy, suggesting the fears may be overstated. Speaking at a recent tech event, Mosseri acknowledged the transformative impact of artificial intelligence but argued that it’s unlikely to replace high-production creators like MrBeast, whose content relies heavily on logistics, human interaction, and real-world execution.

Mosseri believes AI will instead democratize content creation, giving smaller creators access to tools that were once out of reach. By reducing the technical and financial barriers to entry, he suggests generative AI will expand creative opportunities for a wider range of people, potentially leveling the playing field.

However, he did not dismiss the risks associated with AI. Mosseri highlighted the growing challenge of misinformation and visual manipulation, warning that AI-generated content could be used for deceptive or harmful purposes. He stressed that society must develop new habits and critical thinking skills to navigate a world where not everything seen in a video or image is real. He called this a necessary cultural shift, one in which media literacy becomes a core skill, especially for younger generations.

On the issue of AI transparency, Mosseri acknowledged Instagram’s struggles with labeling AI-generated content. He noted that early efforts led to legitimate videos being flagged as AI-generated simply because they had been processed with filters or editing software. Going forward, he emphasized the need for more nuanced solutions, including better context around who is sharing content and why.

While Mosseri disagrees with the idea that AI will destroy the creator economy, he agrees that major shifts are coming. The future, he suggests, depends not on resisting AI, but on adapting to it responsibly and building trust in a rapidly evolving digital landscape.

Google Chrome Cracks Down on Annoying Website Notifications

Google Chrome is rolling out a new set of features aimed at giving users more control over intrusive website notifications. The update targets websites that bombard users with pop-up alerts and marketing messages, often without meaningful engagement or consent.

One key change is the automatic revocation of notification permissions for websites that users no longer visit or interact with. Chrome will quietly disable notifications from sites it deems inactive or spammy, reducing clutter and interruptions without requiring users to take action.

For mobile users, particularly on Android, Chrome is introducing an “Unsubscribe” button embedded directly in notifications. It lets users disable alerts from an annoying website with a single tap, making it easier to clean up notification spam.

The browser’s Safety Check tool has also been upgraded to monitor sites that may misuse permissions. If a site is flagged for abuse or hasn’t been visited in a long time, Chrome may automatically revoke its notification access as a precaution.

Importantly, these changes don’t remove user control. Users can opt out of the automatic revocation feature in settings, or manually re-enable notifications for any site they trust or want to stay connected with.

The move is part of Google’s broader effort to improve web usability and cut down on digital noise. For years, users have complained about being overwhelmed by constant prompts asking to “allow notifications,” only to be spammed by promotions, irrelevant news, or scams.

With these updates, Chrome aims to strike a better balance, keeping helpful notifications such as reminders and updates while filtering out distractions. As websites adjust to the new system, the browsing experience on Chrome could become significantly quieter and more user-friendly.

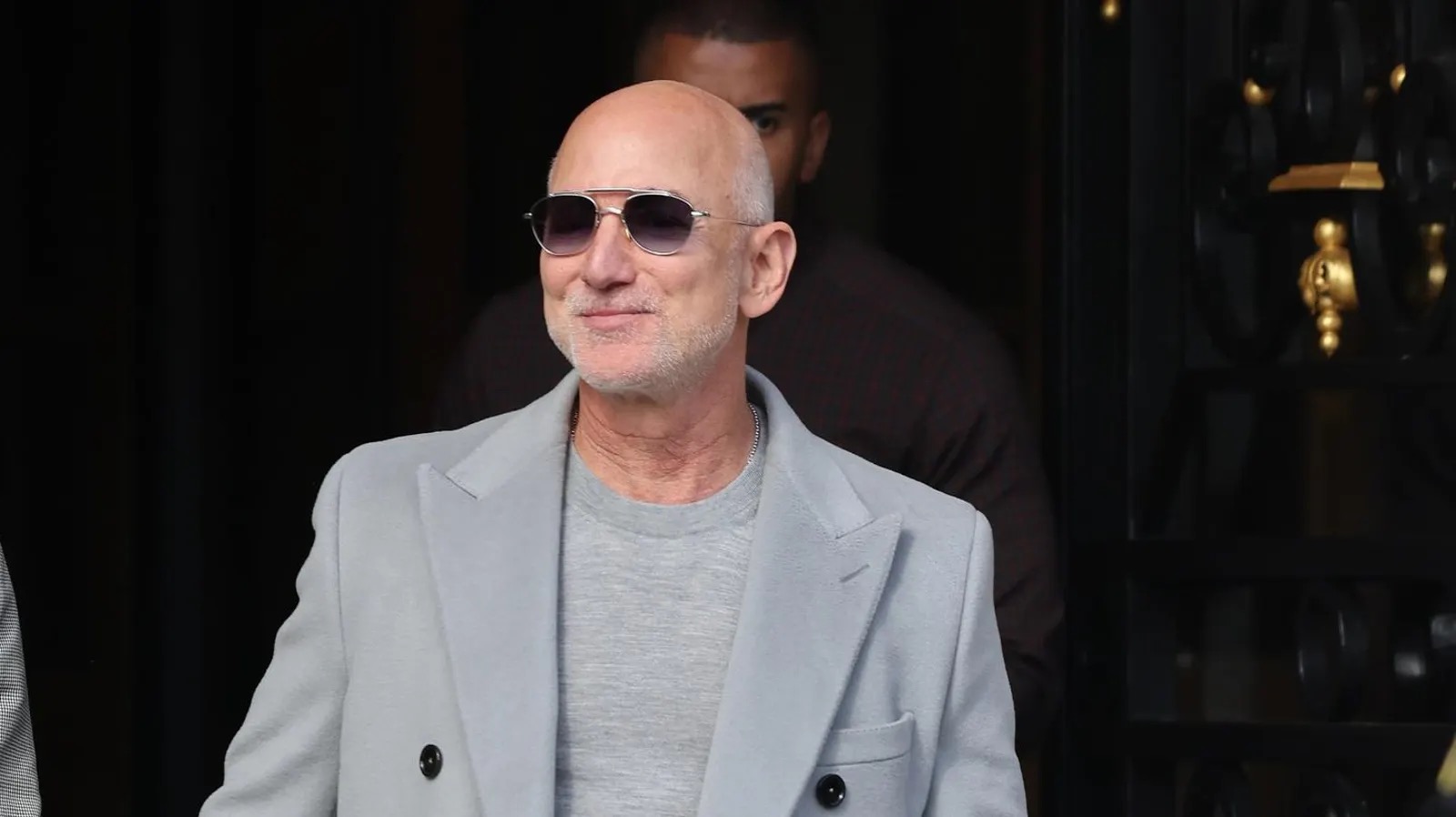

The Fixer’s Dilemma: Chris Lehane and OpenAI’s Impossible Mission

Chris Lehane, a veteran political strategist known for his crisis management skills, has stepped into a crucial role at OpenAI, tasked with navigating one of the most challenging landscapes in tech today. As head of global policy and communications, Lehane faces the daunting mission of balancing OpenAI’s rapid innovation with increasing demands for regulation, transparency, and public trust.

OpenAI is at the forefront of artificial intelligence development, pushing boundaries that raise profound ethical, social, and economic questions. With governments and watchdogs worldwide intensifying scrutiny, Lehane’s job is to smooth tensions, engage with regulators, and build a credible narrative around OpenAI’s ambitions and responsibilities.

However, his role is riddled with contradictions. On the one hand, there is immense pressure to impose stronger oversight, ethical standards, and accountability on AI technologies that could transform, or disrupt, society. On the other hand, OpenAI’s competitive edge relies on agility, control over proprietary data, and rapid deployment of cutting-edge models. Too much regulation risks stifling innovation; too little invites public backlash and potential legal battles.

Lehane must also manage geopolitical complexities, as countries compete to lead AI development while grappling with how to regulate it. OpenAI’s leadership argues that excessive domestic restrictions could weaken U.S. companies against less regulated foreign rivals. Meanwhile, critics worry about the company’s outsized influence and question whether self-regulation is enough.

In this delicate dance, Lehane is more than a fixer; he is a symbol of OpenAI’s broader struggle to align powerful technology with societal expectations. His success depends on finding a middle ground where innovation thrives without compromising safety or public trust. Yet, given the rapid pace of AI evolution and the high stakes involved, it remains an open question whether any individual can resolve the tensions shaping the future of artificial intelligence.