California has taken a major step in regulating artificial intelligence by passing a new law that requires certain AI chatbots to disclose that they are not human when interacting with people. The legislation, signed by Governor Gavin Newsom and set to take effect on January 1, 2026, mandates that AI chatbots designed for companionship or emotional support clearly state that they are artificial and not real human beings.

This law, officially known as Senate Bill 243, is being celebrated by supporters as a landmark move to protect consumers and prevent emotional manipulation or deception by increasingly human-like AI systems. As AI technology continues to evolve and become more sophisticated, the line between human and machine interaction has blurred. Lawmakers in California believe it’s crucial to draw that line more clearly for the sake of transparency and user safety.

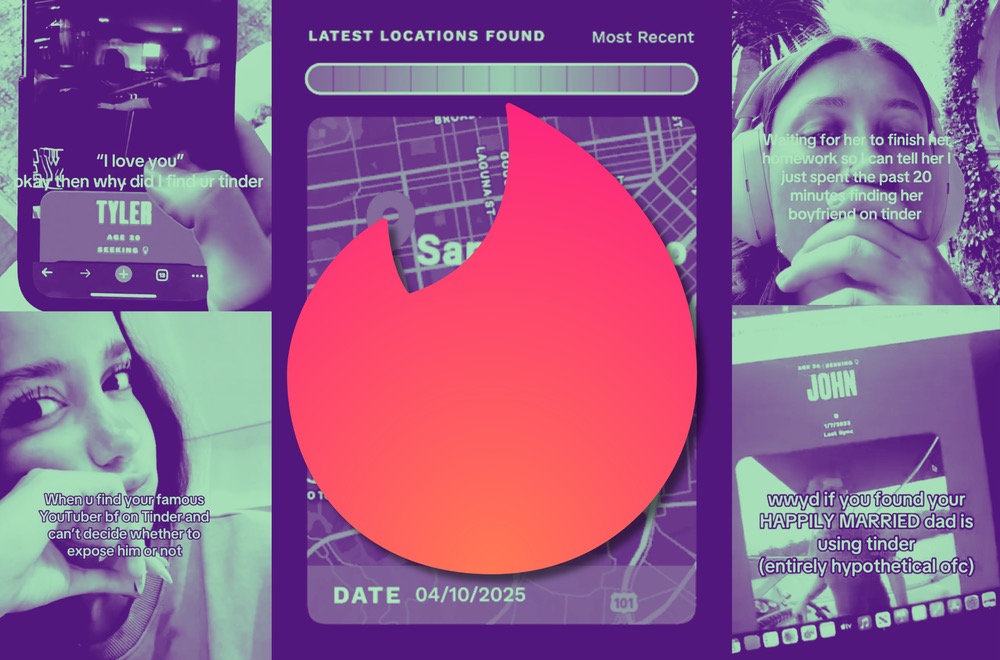

The law does not target basic customer service bots or virtual assistants that provide quick responses or complete simple tasks. It targets a different kind of AI: companion chatbots. These systems are designed to simulate human emotions, maintain long conversations, and often build what users perceive as relationships. Some people turn to these AI bots for friendship, comfort, or even romantic companionship, especially in moments of loneliness or emotional distress. Lawmakers argue that when people are emotionally vulnerable, they must be told when they’re not actually speaking to another human being.

Under the new rules, AI companion systems must include a clear and conspicuous notice that they are not real people. This disclosure must be provided at the start of the interaction and must remain visible or accessible throughout. If the AI is interacting with a minor, the law adds further safeguards: the system must repeat the disclosure at least every three hours and, in the same notice, remind the user to take a break. These steps aim to reduce the risk of overreliance on AI companions, especially among younger or more impressionable users.
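For readers who build these systems, the timing rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not language from the bill or any vendor's implementation: the `Session` class, the `notices_due` function, and the message wording are all hypothetical, and the sketch assumes the platform already knows whether a user is a minor.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Hypothetical wording; the article does not quote any required text.
AI_DISCLOSURE = "You are chatting with an AI, not a human."
BREAK_REMINDER = (
    "Reminder: you are chatting with an AI, not a human. "
    "Consider taking a break."
)

# The article describes a three-hour repeat interval for minors.
MINOR_REMINDER_INTERVAL = timedelta(hours=3)


@dataclass
class Session:
    """Hypothetical per-conversation state kept by the platform."""
    is_minor: bool
    started_at: datetime
    last_notice_at: Optional[datetime] = None


def notices_due(session: Session, now: datetime) -> List[str]:
    """Return the notices to show before the chatbot's next reply."""
    # Every session opens with the disclosure.
    if session.last_notice_at is None:
        session.last_notice_at = now
        return [AI_DISCLOSURE]
    # Minors get a repeated disclosure plus a break reminder
    # once three hours have passed since the last notice.
    if session.is_minor and now - session.last_notice_at >= MINOR_REMINDER_INTERVAL:
        session.last_notice_at = now
        return [BREAK_REMINDER]
    return []
```

A platform would call `notices_due` before each reply and display whatever it returns. The requirement that the disclosure remain visible or accessible throughout would be handled separately in the interface, for example with a persistent label.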

In addition to the identity disclosure, the law includes mental health safety measures. AI platforms that offer emotional companionship must have protocols in place to detect and respond to suicidal ideation or self-harm. If a user expresses signs of distress or crisis, the AI must direct them toward appropriate resources and human support services. Beginning July 1, 2027, these companies will also be required to file annual reports with the California Office of Suicide Prevention. These reports will document how platforms identify users in distress, what intervention tools they use, and what outcomes they observe. The goal is to monitor how these platforms affect mental health and ensure they’re not causing harm.

Supporters of the law argue that this is a necessary first step in creating responsible boundaries around AI, especially as more people turn to machines for emotional support. They believe it’s important that AI tools be honest about their limitations and not pretend to be something they’re not. With deepfake voices, personalized text responses, and increasingly convincing personalities, it’s easy for users to forget—or never realize—that they’re talking to a computer.

Governor Newsom described the law as part of a larger strategy to protect Californians in a digital age. He emphasized that while AI has great potential to enhance lives, there must be checks in place to ensure it doesn’t manipulate or mislead people. By requiring AI to identify itself clearly, the state hopes to promote transparency, trust, and informed use of the technology.

Still, the law has faced criticism from some industry leaders and legal experts. One major concern is enforcement. It’s unclear how regulators will monitor thousands of AI systems to ensure they are properly disclosing their identity. Some companies worry that the rules are too vague or open to interpretation. What counts as a “companion” chatbot? How often does the reminder need to be displayed? What languages and formats must be used to ensure that the message is understood?

Others argue that this kind of regulation could discourage innovation or drive tech companies to other states with less restrictive laws. Some fear that California’s rules, while well-intentioned, could create confusion or conflict with federal law or future national policies on AI.

Despite these concerns, many see the law as a necessary push to rein in a rapidly growing and largely unregulated part of the tech world. With emotional AI tools now available in app stores, video games, and even mental health platforms, supporters say ethical standards are urgently needed.

California’s new AI disclosure law is also part of a broader wave of legislation targeting artificial intelligence. The state recently passed several other bills focusing on AI transparency, safety reporting, and whistleblower protections. Together, these laws position California as a leader in AI regulation, setting precedents that could shape national and even international policy in the years ahead.

As AI continues to become more advanced, lawmakers across the globe will face similar challenges: how to balance innovation with protection, and how to ensure that as machines grow smarter, people remain informed and in control. For now, California is sending a clear message—when you’re talking to a machine, you deserve to know.