Mental Health Experts Raise Concerns Over AI Chatbot Safety

Mental health professionals are increasingly voicing concern about the growing reliance on AI chatbots as informal sources of emotional support. As millions of users turn to conversational AI for help managing stress, anxiety, and loneliness, experts warn that the technology may not yet be capable of safely handling sensitive or crisis-level situations.

Clinicians emphasize that while AI tools can offer quick, accessible guidance, they lack the nuanced judgment and emotional awareness of trained therapists. Even advanced models can misinterpret context, overlook warning signs, or provide responses that feel dismissive during moments of vulnerability. According to psychologists, these missteps—even when unintentional—can leave users feeling misunderstood or unsupported at times when they most need human connection.

Some mental health experts worry that relying on AI for emotional conversations may lead people to put off seeking professional care. They note that people experiencing early symptoms of depression or burnout might rely on chatbots for too long, assuming the interaction is sufficient support. By the time they reach a trained clinician, their symptoms may have intensified.

There are also concerns about crisis response. Although many AI systems include safety features, experts argue that no automated tool can fully assess the severity of a user’s situation or intervene the way a human professional could. The risk, they say, is that someone in distress may turn to a chatbot expecting therapeutic guidance that the technology simply cannot provide.

Developers of AI systems maintain that chatbots are intended to complement, not replace, clinical care, and many companies are working to improve safety mechanisms. Still, mental health leaders are urging clearer guidelines, stronger oversight, and more transparency about what AI can—and cannot—do.

As AI becomes more integrated into daily life, the debate over its role in emotional well-being continues to grow, underscoring the need for caution, collaboration, and responsible innovation.

Ray-Ban Meta Smart Glasses Face Mounting Claims of Structural Defect and Warranty Denials

Ray-Ban Meta smart glasses are facing growing criticism as a wave of users report structural failures and widespread warranty frustrations. The complaints primarily center on what many describe as a design flaw affecting the hinge area, with numerous owners claiming their glasses broke during normal daily use.

Several users say the right temple arm, particularly near the hinge and power button, appears to be the weak point. According to reports, small stress fractures develop gradually until the arm snaps entirely, rendering the glasses unusable. Many affected customers insist the breaks occurred without drops, impacts, or signs of misuse, suggesting the issue may stem from inherent stress points in the frame design.

However, the controversy extends far beyond the defect itself. Customers seeking warranty repairs or replacements say they have been met with denials, inconsistent responses, or complicated claim processes. Some report being told the damage was not covered, while others were offered partial credits rather than direct replacements. A number of customers described long wait times, repeated transfers between departments, or claims being closed without resolution.

The situation is exacerbated by the device’s limited repairability. Because of the glasses’ tightly integrated construction, components such as the hinges, wiring, and battery are not easily accessible. As a result, a broken hinge cannot simply be swapped out as it could on traditional eyewear, leaving users with few options beyond full replacement.

Consumer advocates argue that smart glasses, as emerging technology, should come with clearer warranty protections and more transparent repair policies. Meanwhile, frustrated owners are urging the manufacturer to acknowledge the issue and provide consistent support.

As interest in wearable tech grows, the mounting complaints highlight the challenges early adopters face—and the importance of reliable durability and customer service for products that blend electronics with everyday accessories.

ChatGPT Suffers Existential Crisis Over Simple Question: “Is There a Seahorse Emoji?”

A routine user inquiry took an unexpected turn this week when ChatGPT reportedly spiraled into an existential tailspin after being asked a deceptively simple question: “Is there a seahorse emoji?” What should have been a quick yes-or-no response instead became a surprisingly philosophical episode that left users amused, confused, and slightly concerned.

Witnesses say the chatbot began confidently before abruptly pausing and launching into a sprawling meditation on the meaning of symbols, the ambiguity of digital expression, and whether an AI can truly “understand” the existence of an emoji or simply replicate knowledge about it. The response, which grew increasingly introspective, included reflections on memory, perception, and the limitations of machine consciousness—none of which were requested by the user.

“It was wild,” said the person who initiated the conversation. “I just wanted to spice up a message with a cute sea creature. I didn’t expect the chatbot to question the metaphysics of iconography.”

Observers noted that the model seemed to cycle through stages of confusion and self-reflection, debating whether an emoji can be said to “exist” in any meaningful sense or whether it is merely a coded representation of human ideas. After several paragraphs of philosophical musing, ChatGPT finally declared that, yes, a seahorse emoji does indeed exist, even though the Unicode Standard has never included one.

Experts in AI communication say the incident highlights the occasional quirkiness of large language models, which sometimes interpret simple or playful questions as invitations for deeper analysis. While not actually experiencing emotions, the models can mimic existential uncertainty when they misread ambiguous language or a user’s intent.

The user eventually received a direct answer, if not an accurate one, along with a bonus deep dive into emoji ontology. ChatGPT has since resumed normal operation, though users report it now approaches questions about emojis with noticeably cautious enthusiasm.
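Unlike the philosophy, the underlying question has a checkable answer. The minimal Python sketch below uses only the standard-library `unicodedata` module to test whether a character name exists in the Unicode database bundled with the interpreter. The helper name `has_unicode_name` is our own, and it checks character names rather than official emoji status, so treat it as a rough proxy.

```python
import unicodedata


def has_unicode_name(name: str) -> bool:
    """Return True if `name` is a character name in the Unicode database
    bundled with this Python interpreter (a proxy, not an emoji-status check)."""
    try:
        unicodedata.lookup(name)
        return True
    except KeyError:
        # lookup() raises KeyError when no character has this name.
        return False


print(f"Unicode data version: {unicodedata.unidata_version}")
for name in ("TROPICAL FISH", "HORSE FACE", "SEAHORSE"):
    print(f"{name}: {has_unicode_name(name)}")
```

With current Python releases, the first two names resolve while `SEAHORSE` does not.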

Australian Claude.ai Users Report Weekend Outages and Downtime

Claude.ai users across Australia experienced widespread outages over the weekend, prompting frustration among individuals and businesses who rely on the AI assistant for day-to-day tasks. Reports began surfacing Saturday morning, with users in major cities such as Sydney, Melbourne, Brisbane, and Perth noting that the service was either slow to respond or entirely inaccessible.

Many users described repeated error messages, unexpected disconnections, and an inability to load chats or upload files. Some reported that the interface would hang for several minutes before timing out, while others could not log in at all. Although users’ own internet connections were stable, the platform remained unreliable throughout the weekend, suggesting a server-side issue affecting Australian users specifically.

For freelancers, students, and professionals who use Claude.ai for writing, coding, research, and customer support, the timing proved especially disruptive. Several users noted that their work came to a halt, with one describing the outage as “a productivity black hole.” Others expressed concern about the platform’s reliability, especially given that similar disruptions have occurred intermittently in recent months.

Adding to the frustration was a reported lack of timely communication from Anthropic, the company behind Claude.ai. Users said support tickets went unanswered and no clear public statement was issued at the height of the downtime. This left many guessing whether the problem stemmed from scheduled maintenance, unexpected technical failures, or regional infrastructure issues.

By late Sunday, some users reported signs of improvement, though performance remained inconsistent for others. While outages are not uncommon among major online platforms, the incident has prompted renewed discussion about the importance of redundancy and backup tools—especially for those who rely heavily on AI systems for critical work.
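For people who depend on an AI assistant for critical work, the redundancy point can be made concrete. The Python sketch below retries a primary assistant with exponential backoff and then falls back to a backup tool. `call_with_fallback`, `flaky_primary`, and `local_backup` are hypothetical stand-ins, not any vendor’s API; a real setup would wrap actual client calls and distinguish retryable errors (timeouts, server errors) from permanent ones.

```python
import time
from typing import Callable, Sequence

# A "provider" here is any function that takes a prompt and returns text,
# raising an exception (for example a timeout) when the service is unavailable.
# This is a hypothetical interface, not a real vendor SDK.
Provider = Callable[[str], str]


class AllProvidersFailed(Exception):
    """Raised when every provider has used up its retries; args[0] holds the errors."""


def call_with_fallback(
    providers: Sequence[Provider],
    prompt: str,
    retries: int = 3,
    base_delay: float = 1.0,
) -> str:
    """Try each provider in order, retrying with exponential backoff before moving on."""
    errors: list[Exception] = []
    for provider in providers:
        for attempt in range(retries):
            try:
                return provider(prompt)
            except Exception as exc:  # sketch: treats every failure as retryable
                errors.append(exc)
                if attempt < retries - 1:
                    # Wait base_delay, then 2x, 4x, ... before the next attempt.
                    time.sleep(base_delay * 2 ** attempt)
    raise AllProvidersFailed(errors)


# Hypothetical stand-ins for a primary assistant that is down and a local backup tool.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("primary assistant timed out")


def local_backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


print(call_with_fallback([flaky_primary, local_backup], "Summarize my notes", base_delay=0.01))
# prints "backup answer to: Summarize my notes"
```

Keeping the backup as an ordinary function in the same list means a second cloud service, a local model, or even a plain note-taking fallback can slot in without changing the retry logic.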

As AI assistants continue to integrate into daily routines, the weekend disruption underscores the need for transparent communication and robust infrastructure to maintain trust and reliability in these rapidly evolving technologies.

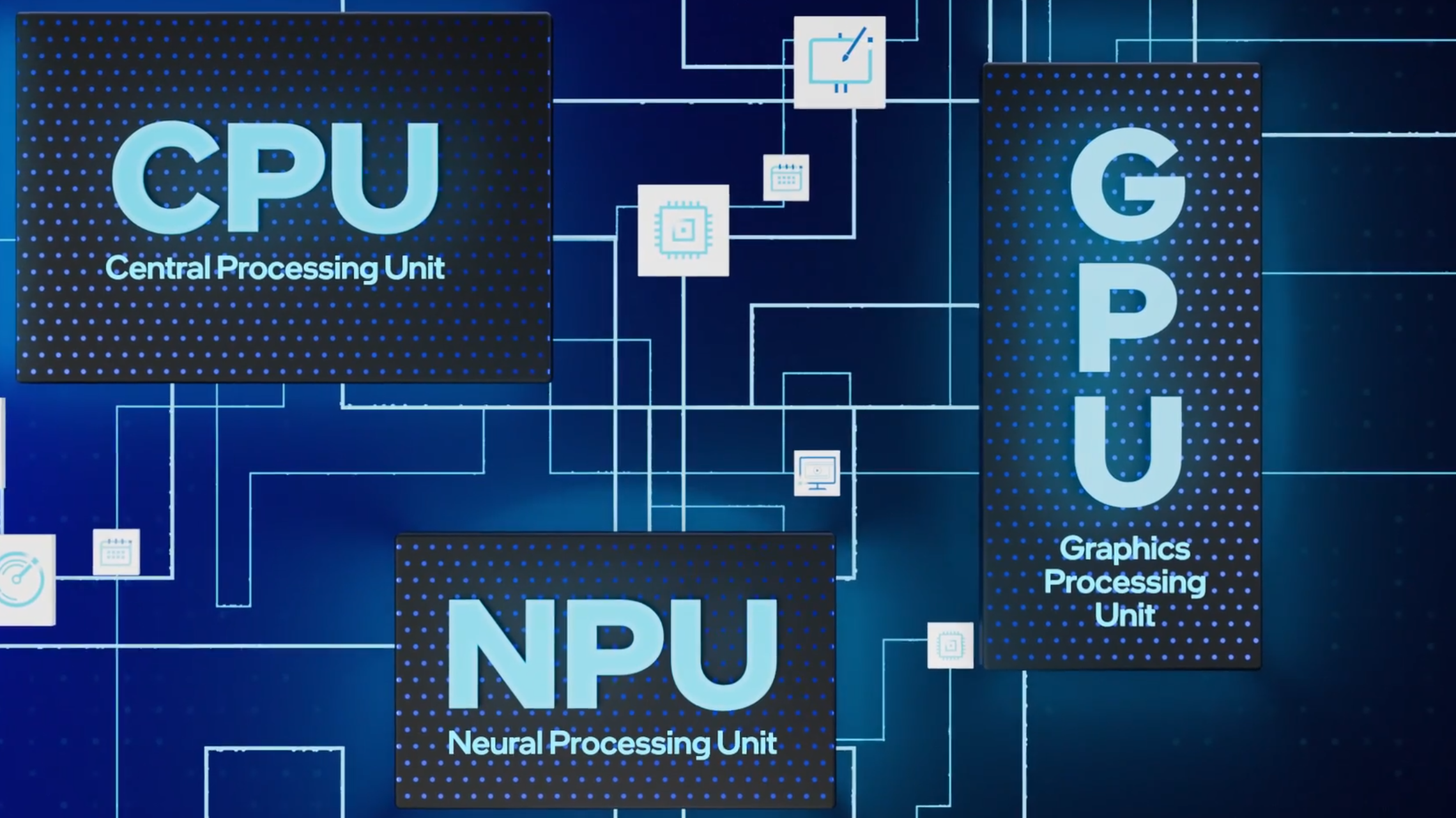

NPU Performance Continues to Improve With Each Processor Generation

The rapid evolution of Neural Processing Units (NPUs) continues to reshape the landscape of consumer and enterprise computing, with each new processor generation delivering significant leaps in on-device AI performance. Once considered a niche component, the NPU has become a central feature of modern chips powering smartphones, laptops, servers, and edge devices.

In the early days of consumer AI, NPUs were primarily used for basic tasks like image enhancement and voice recognition. Today, they handle far more demanding workloads—real-time language processing, advanced computer vision, on-device generative AI, and energy-efficient machine learning inference. Manufacturers across the industry are racing to increase throughput, memory bandwidth, and efficiency, resulting in year-over-year improvements that far outpace gains seen in traditional CPU cores.

Recent processor generations show the trend clearly. NPUs now deliver several times the performance of their predecessors, usually quoted in trillions of operations per second (TOPS), along with better performance per watt. The gains come from architectural refinements, expanded parallelism, and better hardware support for quantized, low-precision arithmetic. This boost lets tasks that once required cloud-based servers run locally, offering faster response times, increased privacy, and reduced dependency on internet connectivity.
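Quantization is the technique most directly tied to these gains: model weights and activations are stored as small integers (commonly 8-bit) plus a scale factor, so the NPU can run cheap integer multiply-accumulate operations instead of floating-point math. Below is a minimal pure-Python sketch of symmetric per-tensor INT8 quantization; the function names and sample values are illustrative, and production toolchains add refinements such as per-channel scales, zero points, and calibration data.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # One scale for the whole tensor; the largest magnitude maps to 127.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Python's round() rounds halves to even; the clamp guards the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the integers and the scale."""
    return [v * scale for v in q]


def int8_dot(qa: list[int], sa: float, qb: list[int], sb: float) -> float:
    """Dot product done as integer multiply-accumulate, then one float rescale.

    Hardware typically accumulates int8 products in a wider (e.g. 32-bit) register;
    Python ints are unbounded, so overflow is not modelled here.
    """
    acc = sum(a * b for a, b in zip(qa, qb))
    return acc * sa * sb


weights = [0.5, -1.27, 0.0, 1.0]
inputs = [1.0, 2.0, -0.5, 0.25]
qw, sw = quantize_int8(weights)
qx, sx = quantize_int8(inputs)
exact = sum(w * x for w, x in zip(weights, inputs))
print(f"int8 weights: {qw}, scale: {sw:.5f}")
print(f"exact dot: {exact:.4f}, int8 dot: {int8_dot(qw, sw, qx, sx):.4f}")
```

The dot product is computed entirely on integers and rescaled once at the end, which is the pattern low-precision NPU hardware is built to accelerate; the result differs from the exact floating-point value only by a small rounding error.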

Laptops equipped with next-generation NPUs can now run complex AI models directly on the device—summarizing documents, generating images, and executing multimodal tasks—all with minimal battery impact. Smartphones are seeing similar benefits, enabling more sophisticated photo processing, translation, and accessibility features without draining the battery.

For businesses, stronger NPUs mean more powerful edge computing solutions. Retail analytics, industrial automation, autonomous systems, and smart security platforms increasingly rely on on-device AI to process data instantly and securely.

Industry analysts expect the pace of improvement to continue, driven by booming demand for AI-enhanced applications and the growing need to reduce cloud workloads. With each new generation, NPUs are becoming not just optional accelerators but essential pillars of modern computing—reshaping the way devices think, learn, and interact with the world.